Develop GenAI Strategy for your organization with AI Scientist, Omid Bakhshandeh

Engaging topics at a glance

- 00:14:45 Key factors to consider while formulating LLM strategy
- 00:17:15 What is a Foundational Model?
- 00:20:50 Should companies train their own model or leverage existing models?
- 00:26:00 Considerations when leveraging existing LLM model as a foundational model
- 00:29:30 Open-source vs API based
- 00:39:50 Time to Market
- 00:47:07 Challenges when building own LLM
- 00:52:00 Hybrid Model, a mid-way
- 00:54:20 Conclusion

“Developing GenAI Strategy,” with guest Omid Bakhshandeh, an AI Scientist with a PhD in Artificial Intelligence, discusses how organizations can begin adopting GenAI.

Whether you are the company’s CEO or leading a business unit, if you are asking yourself, “Should I develop an AI strategy?”, that is the wrong question. Today, we know that if you don’t have an AI strategy, your odds of being successful in the next couple of years will diminish. The right question is: what is my AI strategy, and how fast can I deploy it? Answering it starts with large language models, which are at the heart of every company’s AI strategy. In a previous episode with Professor Anum Datta, we unpacked LLMs and explored what they are. This episode takes that conversation to the next level and covers the key things you need to know about LLMs to develop your company’s AI strategy.

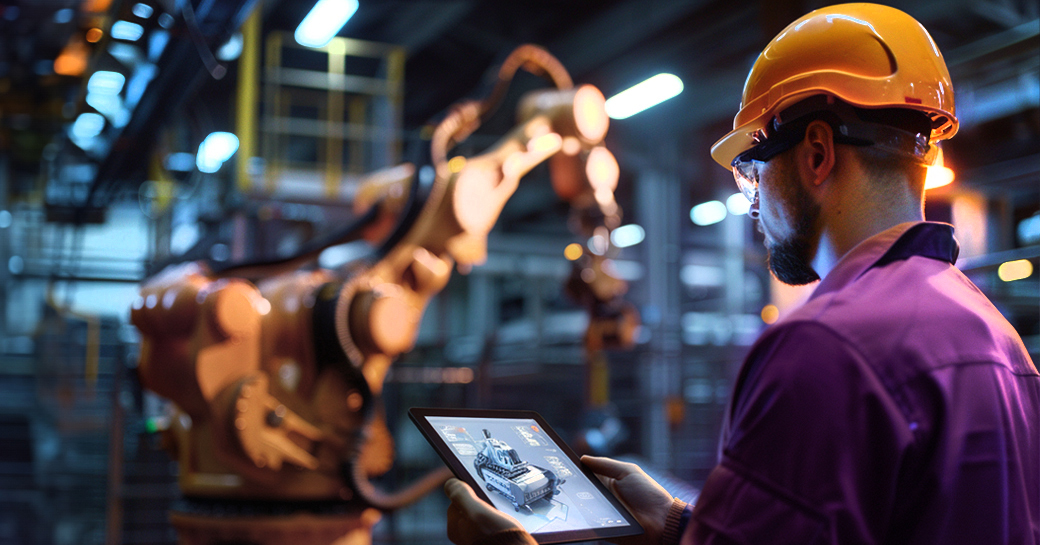

In the current landscape, Large Language Models (LLMs) capture vast amounts of knowledge and serve as repositories of it, which has given rise to the concept of foundational models. With this concept, there is no need to train an LLM from the ground up. Instead, existing LLMs on the market, which have already encapsulated that knowledge, can be integrated into applications. In most cases, companies benefit from following this strategy. The trade-off is that forgoing established LLMs risks delaying time to market.

Building a cloud from scratch? Unlikely. Just as you leverage cloud providers’ tools, AI benefits from tools that sit on established structures.

– Omid Bakhshandeh

On the other hand, some companies that hold significant volumes of unique, customized data may consider developing proprietary foundational models and specialized LLMs. This strategy lets them integrate such models into their industries and opens up potential monetization opportunities.

The key for leaders is to pay close attention to the potential use cases, data, and the support system available when building the AI strategy.

Production Team

Arvind Ravishunkar, Ankit Pandey, Chandan Jha